Robotic Vision with Refractive Objects

- We describe a new kind of feature, the refractive light-field feature (RLFF), that exists in the patterns of light refracted through objects

- We propose efficient methods for detecting and extracting RLFFs from light field (LF) imagery

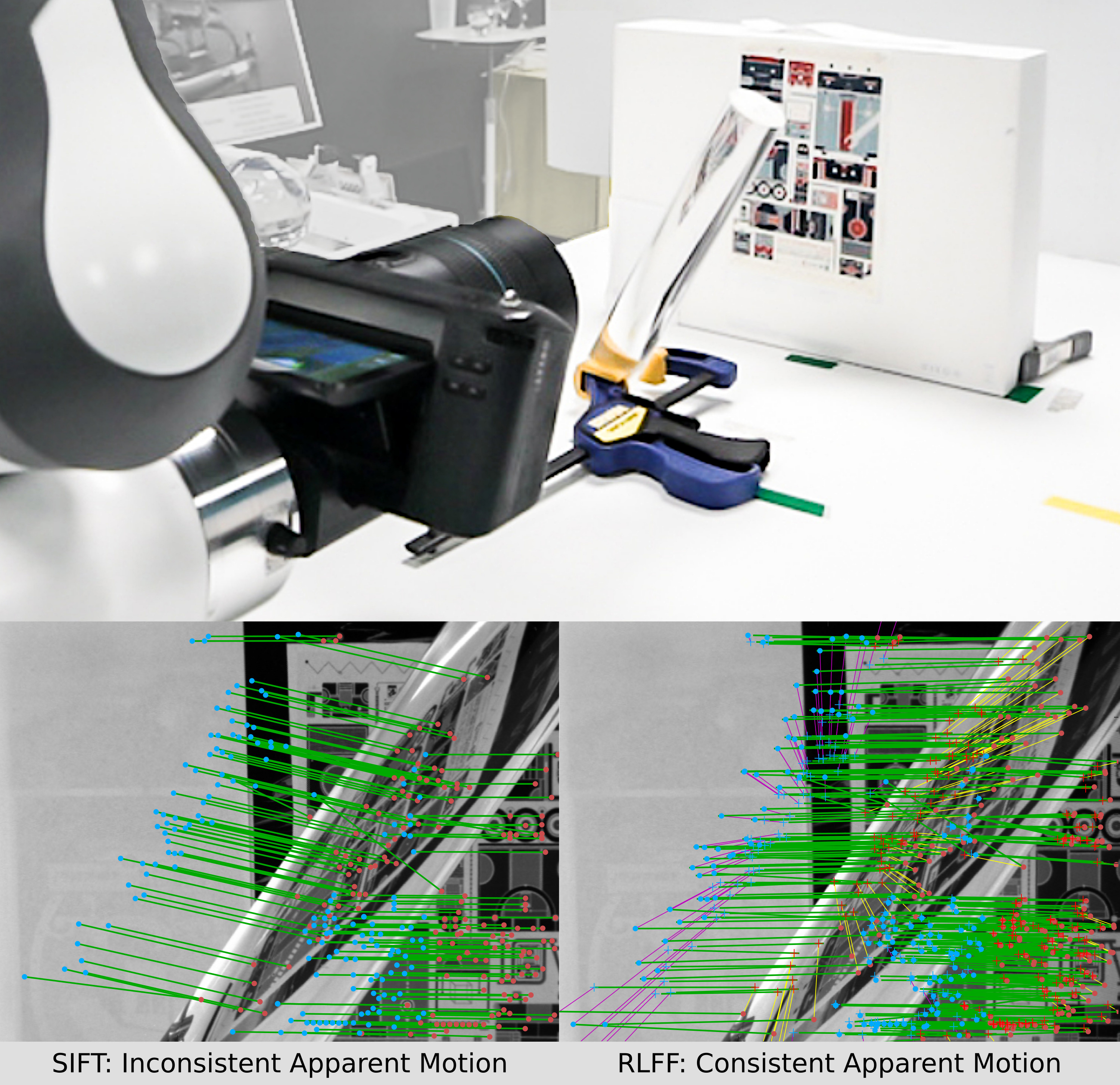

- RLFFs can be used in place of conventional features such as SIFT, and improve structure from motion (SfM) performance in scenes dominated by refractive objects

- We show more accurate camera trajectory estimates, 3D reconstructions, and more robust convergence, even in complex scenes where state-of-the-art methods fail

RLFF advances robotic vision around refractive objects, with applications in manufacturing, quality assurance, pick-and-place, and domestic robotics around glass and other transparent materials.

Publications

[1] D. Tsai, P. Corke, T. Peynot, and D. G. Dansereau, “Refractive light-field features for curved transparent objects in structure from motion,” IEEE Robotics and Automation Letters (RA-L, IROS), 2021.

[2] D. Tsai, D. G. Dansereau, T. Peynot, and P. Corke, “Distinguishing refracted features using light field cameras with application to structure from motion,” IEEE Robotics and Automation Letters (RA-L, ICRA), vol. 4, no. 2, pp. 177–184, Apr. 2019.

Downloads

The dataset used in the RLFF paper [1] is available for download (16 GB).

The dataset was captured with a robotic arm-mounted Lytro Illum, and contains 218 LFs of 20 challenging scenes with a variety of refractive and Lambertian objects. The dataset includes the raw LFs and known camera trajectories.

Gallery

These observations also apply to any point identifiable using a 2D feature, e.g. Harris corners or SIFT features.

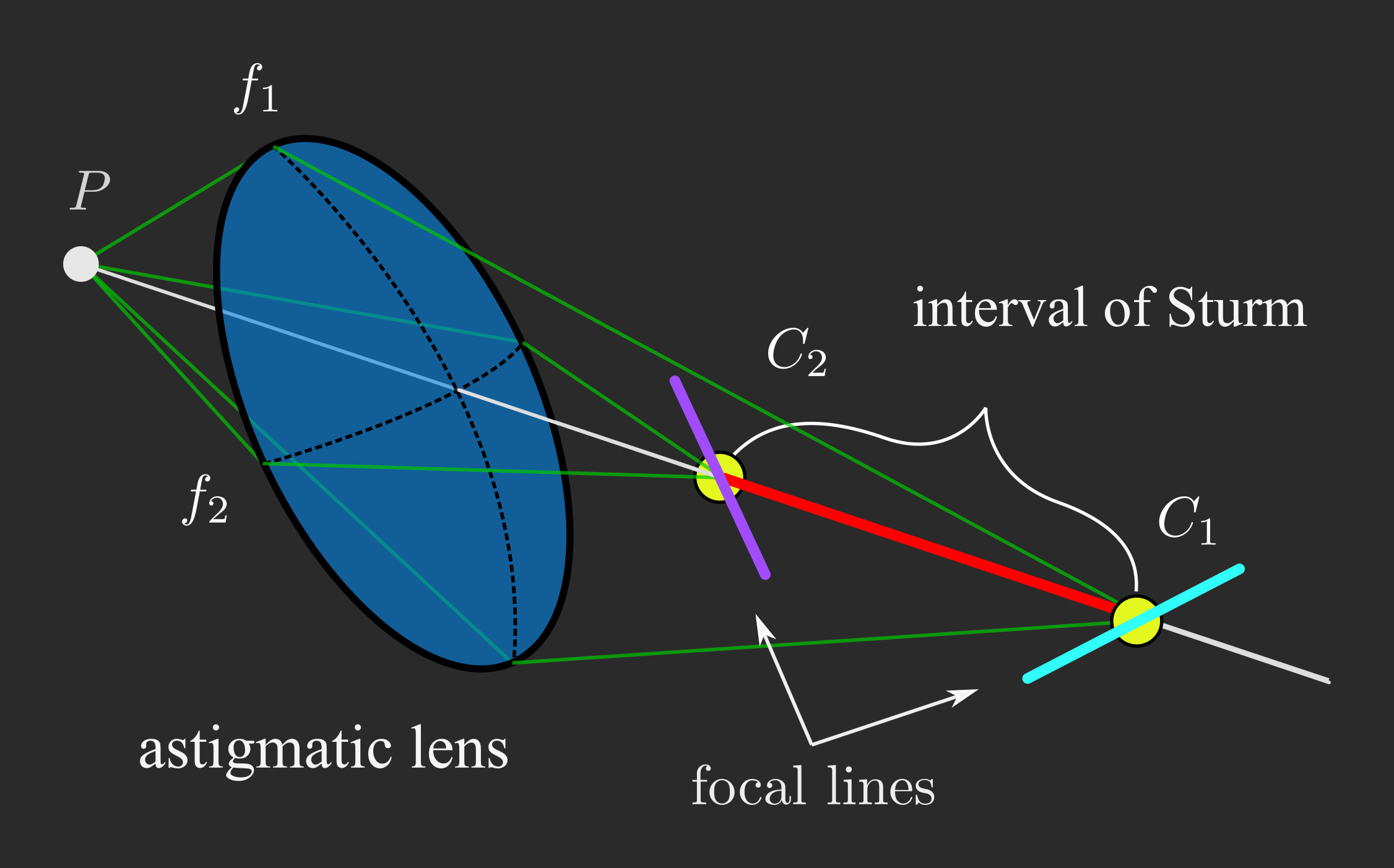

Even in the general case of unequal radii of curvature along non-orthogonal axes, light from the point forms two lines of focus separated by an interval. The points at each end of this interval are consistent when observed from different camera viewpoints.

Any 2D feature can form the basis for this approach. We match features between views, then employ eigenvalue decomposition to explain the feature observations in terms of the interval's parameters; see [1] for full details.
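To make the eigenvalue idea concrete, here is a minimal sketch under stated assumptions; it is not the formulation used in [1]. We assume that each matched feature's image position (u, v) varies linearly with the camera position (s, t) across the light field, fit that 2×2 slope matrix by least squares, and eigen-decompose its symmetric part. In this simplified model, Lambertian content gives a scalar multiple of the identity, so the two eigenvalues coincide (a single point), while a feature seen through a curved refractive surface gives two different eigenvalues, one per line of focus. The observation format, the function names `fit_slope_matrix` and `principal_slopes`, and the symmetrization step are all hypothetical; converting slopes to metric depth requires camera calibration, which is not modelled here.

```python
import math

# Hypothetical observation format (our assumption, not from [1]):
# ((s, t), (u, v)) pairs giving a camera position within the light field
# and the matched feature's image position seen from that position.


def fit_slope_matrix(observations):
    """Least-squares fit of (u, v) = p0 + A (s, t) for one matched feature."""
    n = len(observations)
    s_bar = sum(c[0] for c, _ in observations) / n
    t_bar = sum(c[1] for c, _ in observations) / n
    u_bar = sum(p[0] for _, p in observations) / n
    v_bar = sum(p[1] for _, p in observations) / n
    sxx = sxy = syy = sxu = syu = sxv = syv = 0.0
    for (s, t), (u, v) in observations:
        ds, dt, du, dv = s - s_bar, t - t_bar, u - u_bar, v - v_bar
        sxx += ds * ds
        sxy += ds * dt
        syy += dt * dt
        sxu += ds * du
        syu += dt * du
        sxv += ds * dv
        syv += dt * dv
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("camera positions must span two dimensions")
    # Solve the 2x2 normal equations separately for each row of A.
    a11 = (syy * sxu - sxy * syu) / det
    a12 = (sxx * syu - sxy * sxu) / det
    a21 = (syy * sxv - sxy * syv) / det
    a22 = (sxx * syv - sxy * sxv) / det
    p0 = (u_bar - a11 * s_bar - a12 * t_bar, v_bar - a21 * s_bar - a22 * t_bar)
    return p0, [[a11, a12], [a21, a22]]


def principal_slopes(A):
    """Eigen-decompose the symmetric part of A (a simplifying assumption).

    Returns (major, minor, angle): the two eigenvalues and the direction of
    the major eigenvector in radians (defined only modulo pi).
    """
    a, d = A[0][0], A[1][1]
    b = 0.5 * (A[0][1] + A[1][0])
    mean = 0.5 * (a + d)
    radius = math.hypot(0.5 * (a - d), b)
    major, minor = mean + radius, mean - radius
    if abs(b) > 1e-12:
        angle = math.atan2(major - a, b)  # eigenvector (b, major - a)
    else:
        angle = 0.0 if a >= d else math.pi / 2
    return major, minor, angle


# Synthetic example on a 3x3 grid of camera positions.
grid = [(s, t) for s in (-1, 0, 1) for t in (-1, 0, 1)]
lambertian = [((s, t), (10 + 0.5 * s, 20 + 0.5 * t)) for s, t in grid]
refracted = [((s, t), (10 + 0.2 * s, 20 + 0.8 * t)) for s, t in grid]
for name, obs in (("lambertian", lambertian), ("refracted", refracted)):
    _, A = fit_slope_matrix(obs)
    major, minor, _ = principal_slopes(A)
    print(name, round(major, 3), round(minor, 3))
# lambertian 0.5 0.5   (equal slopes: the interval collapses to a point)
# refracted 0.8 0.2    (unequal slopes: two lines of focus)
```

The gap between the two eigenvalues plays the role of the interval here: it shrinks to zero for Lambertian content, which mirrors the collapse to a single point described below.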

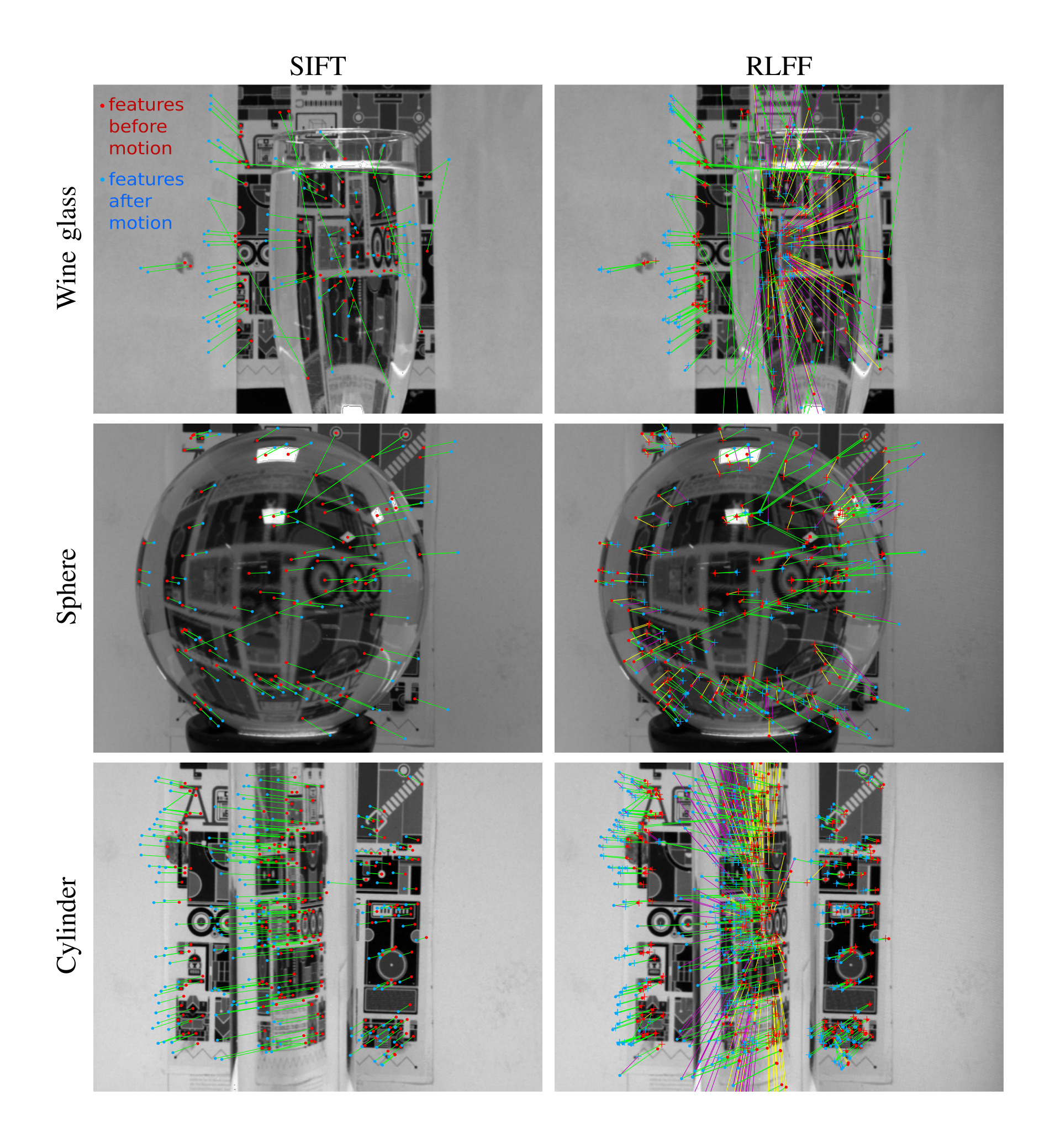

Careful inspection shows that the single point associated with each SIFT feature is replaced by the interval associated with the corresponding RLFF.

Note that RLFFs generally lie in the space between objects rather than on surfaces, unlike conventional features operating on Lambertian scene content.

Note also that RLFF gracefully collapses to a single point when applied to Lambertian scene content.

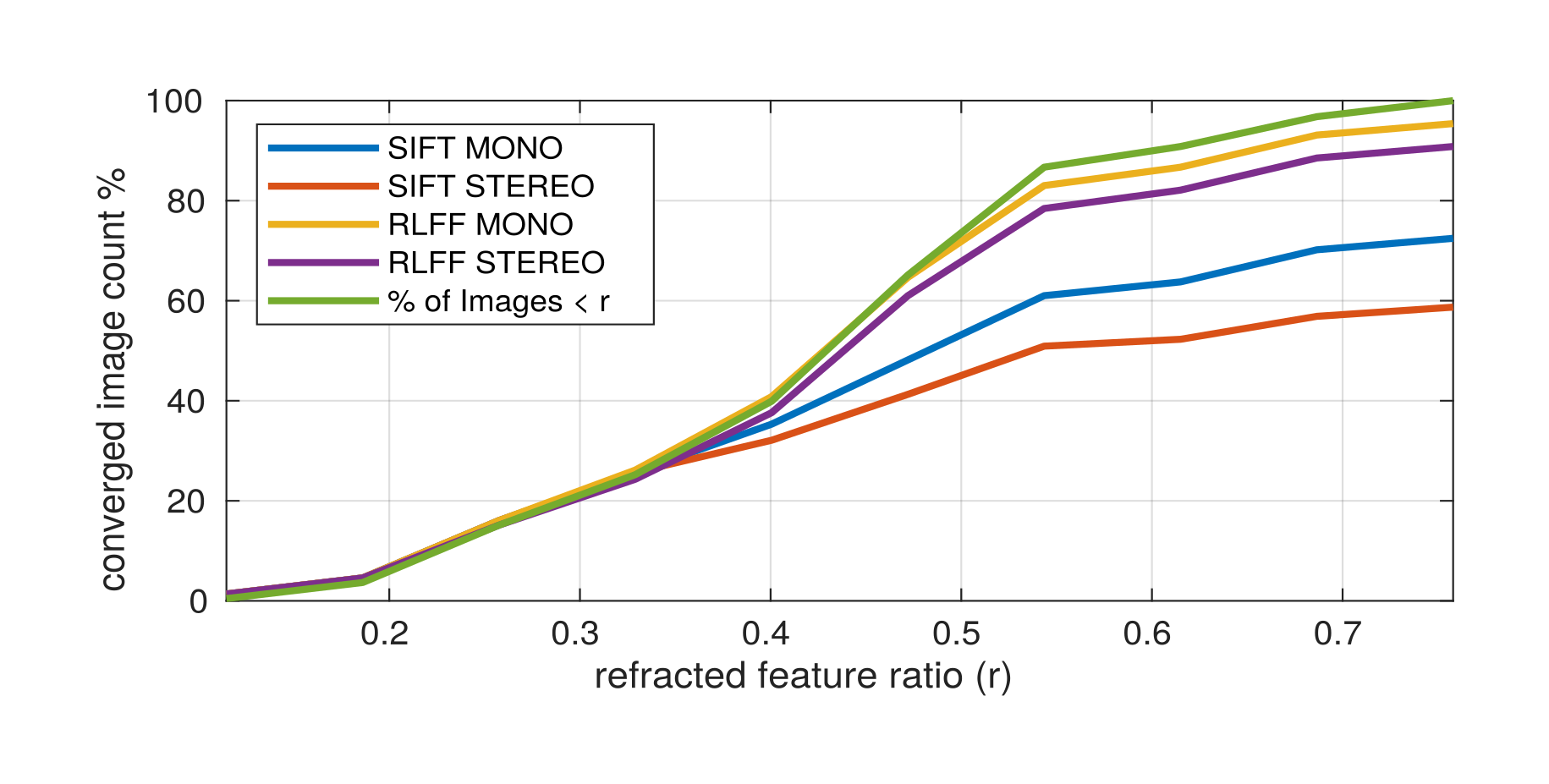

For full results please see the paper [1]. We evaluate convergence, camera trajectory accuracy, individual feature performance, and model completeness in structure from motion.

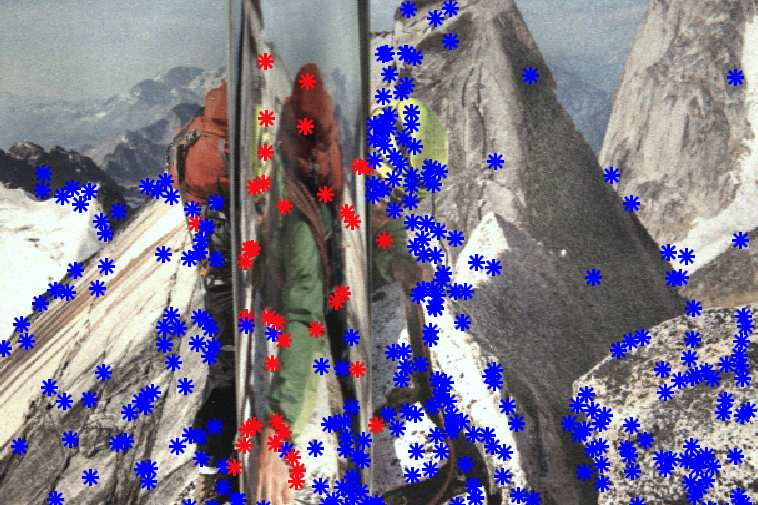

Here we see features identified as refractive. In [1] we show that rather than ignoring these features, we can make sense of them and use them to improve SfM in challenging scenes.
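Identifying which features are refractive is the subject of [2]. As a simplified illustration only, and not the method of [2], one can fit the single-slope model that a Lambertian point would obey, (u, v) = (u0, v0) + λ(s, t) with one slope λ shared by both image axes, and flag features whose residual exceeds a threshold. The observation format, the function names `lambertian_fit_rms` and `is_refracted`, and the 0.1-pixel threshold are hypothetical choices.

```python
import math

# Hypothetical observation format (our assumption): ((s, t), (u, v)) pairs,
# i.e. a camera position within the light field and the matched feature's
# image position as seen from that position.


def lambertian_fit_rms(observations):
    """Fit (u, v) = (u0, v0) + lam * (s, t) with a single shared slope lam.

    Returns (lam, rms), where rms is the root-mean-square residual per
    image coordinate, in the same units as (u, v).
    """
    n = len(observations)
    s_bar = sum(c[0] for c, _ in observations) / n
    t_bar = sum(c[1] for c, _ in observations) / n
    u_bar = sum(p[0] for _, p in observations) / n
    v_bar = sum(p[1] for _, p in observations) / n
    centred = [(s - s_bar, t - t_bar, u - u_bar, v - v_bar)
               for (s, t), (u, v) in observations]
    den = sum(ds * ds + dt * dt for ds, dt, _, _ in centred)
    if den == 0.0:
        raise ValueError("need at least two distinct camera positions")
    # Closed-form least-squares slope shared by the u and v axes.
    lam = sum(ds * du + dt * dv for ds, dt, du, dv in centred) / den
    sq = sum((du - lam * ds) ** 2 + (dv - lam * dt) ** 2
             for ds, dt, du, dv in centred)
    return lam, math.sqrt(sq / (2 * n))


def is_refracted(observations, threshold_px=0.1):
    """Flag a feature whose observations do not fit a single shared slope.

    threshold_px is a hypothetical tuning parameter, in pixels.
    """
    _, rms = lambertian_fit_rms(observations)
    return rms > threshold_px


grid = [(s, t) for s in (-1, 0, 1) for t in (-1, 0, 1)]
lambertian = [((s, t), (10 + 0.5 * s, 20 + 0.5 * t)) for s, t in grid]
refracted = [((s, t), (10 + 0.2 * s, 20 + 0.8 * t)) for s, t in grid]
print(is_refracted(lambertian))  # False
print(is_refracted(refracted))   # True
```

A residual test like this only detects departures from a single shared slope; light refracted through a surface with equal curvature in both directions could still fit the model, just at an incorrect depth, so it should be read as a heuristic.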

Citing

@article{tsai2021refractive,

title = {Refractive Light-Field Features for Curved Transparent Objects in Structure from Motion},

author = {Dorian Tsai and Peter Corke and Thierry Peynot and Donald G. Dansereau},

journal = {IEEE Robotics and Automation Letters ({RA-L, IROS})},

year = {2021},

organization = {IEEE}

}

@article{tsai2019distinguishing,

title = {Distinguishing Refracted Features using Light Field Cameras with Application to Structure from Motion},

author = {Dorian Tsai and Donald G. Dansereau and Thierry Peynot and Peter Corke},

journal = {IEEE Robotics and Automation Letters ({RA-L, ICRA})},

year = {2019},

volume = {4},

number = {2},

pages = {177--184},

month = apr,

organization = {IEEE}

}